Examining DALL-E, Midjourney and Stable Diffusion - and the implications for games development.

AI-generated imagery is here.

But how could this affect video games?

Modern titles are extremely art-intensive, requiring countless pieces of texture and concept art.

If developers could harness this tech, perhaps the speed and quality of asset generation could radically increase.

I tried three of the leading AI generators: DALL-E 2, Stable Diffusion, and Midjourney.

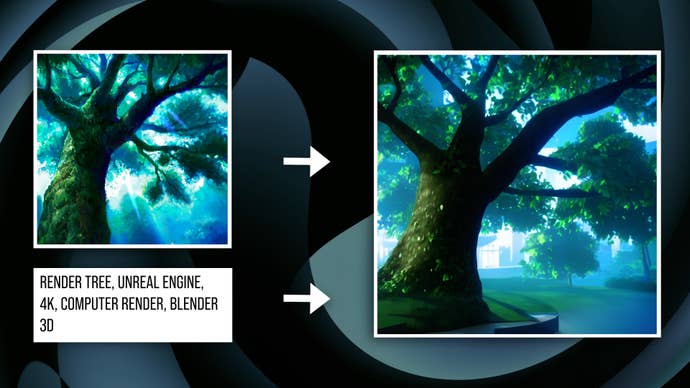

At the moment, the default way of using AI image generators is through something called ‘prompting’.

Right now this is only available in Stable Diffusion.

On some level, the AI is ‘learning’ how to create art with superhuman versatility and speed.

The positive implications for the video game industry are numerous.

For example, remasters are becoming ever more common.

However, older titles come saddled with technical baggage.

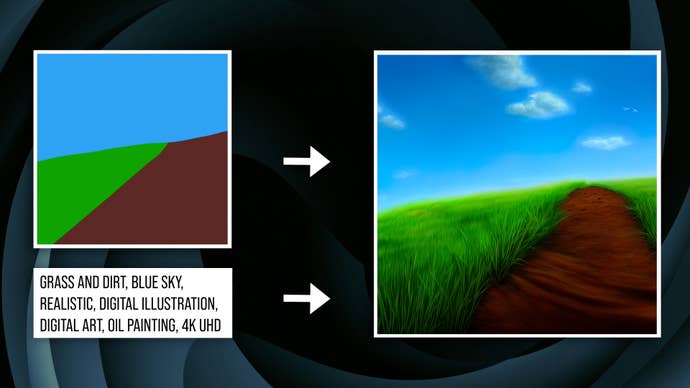

But what if we generated all-new assets instead of merely trying to add detail?

That’s where AI image generation comes in.

Take the Chrono Cross remaster, for example.

There are other examples in the video above.

You could, of course, apply the same techniques to creating original assets for games.

The possibilities here seem virtually endless.

Stable Diffusion also has an option to generate tileable images, which should help with creating textures.
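To make "tileable" a little more concrete, here is a minimal sketch of how you might sanity-check a generated texture for visible seams. It is not how Stable Diffusion produces tileable output; it only measures how much the pixel values jump where one copy of the texture would meet the next. The `seam_error` and `make_texture` helpers and the synthetic sine-wave pattern are illustrative assumptions, standing in for real image data.

```python
import math

def seam_error(texture):
    """Mean absolute jump across the wrap-around seams of a 2D grid.

    Compares the last column with the first column and the last row with
    the first row, i.e. the pixels that become neighbours once tiled.
    """
    height, width = len(texture), len(texture[0])
    horizontal = sum(abs(row[-1] - row[0]) for row in texture)
    vertical = sum(abs(texture[-1][x] - texture[0][x]) for x in range(width))
    return (horizontal + vertical) / (height + width)

def make_texture(size, periods):
    """Synthetic greyscale pattern (hypothetical stand-in for a real image).

    A whole number of periods wraps cleanly; a fractional number does not.
    """
    return [
        [0.5 + 0.5 * math.sin(2 * math.pi * periods * (x + y) / size)
         for x in range(size)]
        for y in range(size)
    ]

tileable = make_texture(64, periods=2)       # repeats exactly every 64 px
mismatched = make_texture(64, periods=2.5)   # cut off mid-cycle at the edge

print("tileable seam error:  ", round(seam_error(tileable), 3))
print("mismatched seam error:", round(seam_error(mismatched), 3))
```

A low seam error means the edges line up about as well as any two neighbouring pixels inside the image, which is what you want before dropping a texture onto a large surface.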

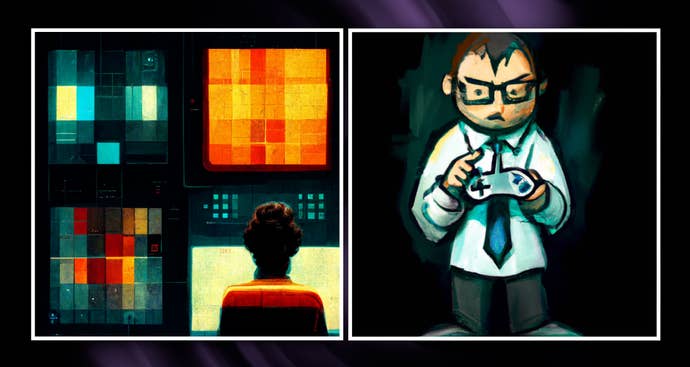

I can see these tools being used earlier in the production process as well.

During development, studios need countless pieces of concept art.

Plug in a few parameters and you might easily generate hundreds of examples to work from.
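As a rough illustration of how a handful of parameters turns into hundreds of candidates, the sketch below builds a batch of prompt and seed combinations. The `build_prompts` helper, the subject, and the style and lighting lists are all hypothetical; the resulting jobs would still need to be passed to whichever generator you are using.

```python
from itertools import product

def build_prompts(subject, styles, lightings, seeds):
    """Return one job dict per (style, lighting, seed) combination."""
    jobs = []
    for style, lighting, seed in product(styles, lightings, seeds):
        jobs.append({
            "prompt": f"{subject}, {style}, {lighting}",
            "seed": seed,  # a different seed gives a different image for the same prompt
        })
    return jobs

# Illustrative parameters only; swap in whatever the project calls for.
jobs = build_prompts(
    subject="concept art of a ruined castle",
    styles=["oil painting", "pencil sketch", "matte painting"],
    lightings=["golden hour", "overcast", "moonlit"],
    seeds=range(12),
)

print(len(jobs))          # 3 styles x 3 lightings x 12 seeds
print(jobs[0]["prompt"])
```

Three styles, three lighting setups and a dozen seeds already give over a hundred distinct jobs, so the bottleneck quickly becomes sorting through the results rather than producing them.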

Key concept art techniques translate to these AI workflows too.

By feeding the AI a base image to guide composition, we can do the exact same thing.

Impressive results are achievable, but it is important to stress that current AI models are hardly infallible.

Different subject areas in commercial artwork tend to use different techniques and this gets reflected in the AI outputs.

To generate consistent-looking imagery, you will probably need to engineer your prompts carefully.

And even then, getting something like what you’re looking for requires some cherry-picking.

AI art does seem like a very useful tool, but it does have its limits at the moment.

A few minor touch-ups can eliminate any distracting AI artifacts or errors.

I’d expect a potential ‘DALL-E 3’ or ‘Stabler Diffusion’ to deliver more compelling and consistent results.

Clearly these products work well right now though, so which is the best option?

In terms of quality, DALL-E 2 is very capable of interpreting abstract inputs and generating creative results.

Stable Diffusion tends to require much more hand-holding.

The big advantage of Stable Diffusion is its image prompting mode, which is very powerful.

In my opinion, it’s the worst of the three.

Stable Diffusion, on the other hand, is totally free and open-source.

This wouldn’t be Digital Foundry without some performance analysis.

Running Stable Diffusion locally produces variable results, depending on your hardware and the quality level of the output.

Using more advanced samplers can also drive up the generation time.
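If you want to measure this on your own machine, a small timing harness along these lines would do the job. This is only a sketch: `fake_generate` is a placeholder that does no real work, the sampler names are made up, and the `width`, `height`, `steps` and `sampler` parameters are assumptions about what your local setup exposes. Swap in a real generation call to get meaningful numbers.

```python
import time
from itertools import product

def benchmark(generate, resolutions, step_counts, samplers):
    """Time one generate() call for every combination of settings."""
    results = []
    for (width, height), steps, sampler in product(resolutions, step_counts, samplers):
        start = time.perf_counter()
        generate(width=width, height=height, steps=steps, sampler=sampler)
        elapsed = time.perf_counter() - start
        results.append({
            "resolution": f"{width}x{height}",
            "steps": steps,
            "sampler": sampler,
            "seconds": elapsed,
        })
    return results

def fake_generate(width, height, steps, sampler):
    """Placeholder only: replace with a call into your local image generator."""
    return None

results = benchmark(
    fake_generate,
    resolutions=[(512, 512), (768, 768)],
    step_counts=[20, 50],
    samplers=["sampler_a", "sampler_b"],  # hypothetical names
)

for row in results:
    print(row["resolution"], row["steps"], row["sampler"], f"{row['seconds']:.4f}s")
```

Running each combination once keeps the sketch short; for real measurements you would likely want a warm-up run and several repeats per setting.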

Based on my experiences, AI image generation is a stunning, disruptive technology.

Punch in some words, and get a picture out.

The most significant barrier at this point is pricing.

Exactly how far will these tools go?